Offload Shiny's Workload: COVID-19 processing for the WHO/Europe

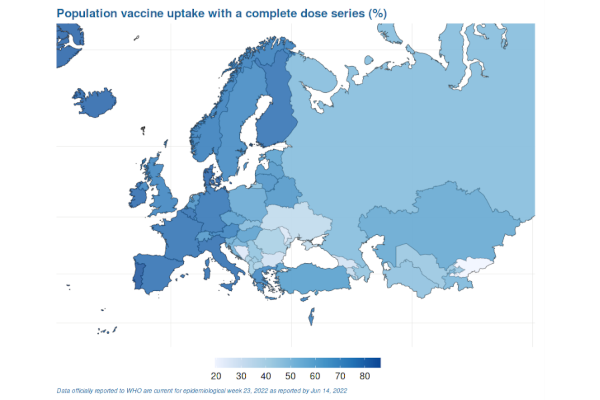

At Jumping Rivers, we have a wealth of experience developing and maintaining Shiny applications. Over the past year, we have been maintaining a Shiny application for the World Health Organization Europe (WHO/Europe) that presents data about COVID-19 vaccination uptake across Europe.

The great strength of Shiny is that it simplifies the production of data-focused web applications, making it relatively easy to present data to users / clients in an interactive way. However data can be big and data-processing can be complex, time-consuming and memory-hungry. So if you bake an entire data pipeline into a Shiny application, you may end up with an application that is costly to host and doesn’t provide the best user experience (slow, frequently crashes).

One of the best tips for ensuring your application runs smoothly is simple:

Do as little as possible.

That is … make sure your application does as little as possible.

The data upon which the application is based comes from several sources, across multiple countries, is frequently updated, and is constantly evolving. When we joined this project, the integration of these datasets was performed by the application itself. This meant that when a user opened the app, multiple large datasets were downloaded, cleaned up, and combined together—a process that might take several minutes—before the user could see the first table.

Do as little processing as possible

A simple data-driven app may look as follows: It downloads some data, processes that data and then presents a subset of the raw and processed data to the user.

The initial data processing steps may make the app very slow, and if it is really sluggish, may mean that users close the app before it fully loads.

Since the data processing pipeline is encoded in the app, a simple way to improve speed is for the app to cache any processed data. With the cached, processed data in place, for most users the app would only need to download or import the raw and the processed data—alleviating the need for any data processing while the app is running. But, suppose the raw data had updated. Then when the next user opens the app, the data-processing and uploading steps would run. Though that user would have a poor experience, most users wouldn’t.

This was the structure of the WHO/Europe COVID-19 vaccination programme monitoring application before we started working with it. Raw and processed data were stored on an Azure server and the app ensured that the processed data was kept in-sync with any updates to the raw data. The whole data pipeline was only run a few times a week, because the raw datasets were updated on a weekly basis. The load time for a typical user was approximately 1 minute, whereas the first user after the raw data had been updated may have to wait 3 or 4 minutes for the app to load.

Transfer as little data as possible

Data is slow. So if you need lots of it, keep it close to you, and make sure you only access the bits that you need.

There is a hierarchy of data speeds. For an app running on a server, data-access is fastest when stored in memory, slower when stored on the hard-drive, and much, much slower when it is accessed via the internet. So, where possible, you should aim to store the data that is used within an app on the server(s) from which the app is deployed.

With Shiny apps, it is possible to bundle datasets alongside the source code, such that wherever the app is deployed, those datasets are available. A drawback of coupling the source code and data in this way, is that the data would need to be kept in version control along with your source code, and a new deployment of the app would be required whenever the data is updated. So for datasets that are frequently updated (as for the vaccination counts that underpin the WHO/Europe app), this is impractical. But storing datasets alongside the source code (or in a separate R package that is installed on the server) may be valuable if those datasets are unlikely to change during the lifetime of a project.

For datasets that are large, or are frequently updated, cloud storage may be the best solution. This allows collaborators to upload new data on an ad-hoc basis without touching the app itself. The app would then download data from the cloud for presentation during each user session.

That solution might sound mildly inefficient. For each user that opens the app, the same datasets are downloaded—likely, onto the same server. How can we make this process more efficient? There are some rather technical tips that might help—like using efficient file formats to store large datasets, cacheing the data for the app’s landing page, or using asynchronous computing to initiate downloading the data while presenting a less data-intensive landing page.

A somewhat less technical solution is to identify precisely which datasets are needed by the app and only download them.

Imagine the raw datasets could be partitioned into:

- those that are only required when constructing a (possibly smaller) processed dataset that is presented by the app; and

- those that are actually presented by the app.

If this can be done, there won’t be any difference when the app runs the whole data processing pipeline, both sets of raw data would still be downloaded, and the processed data would be uploaded to the cloud. But for most users the app would only download the processed datasets and the second set of raw datasets.

In the COVID-19 vaccination programme monitoring app, evolving to this state meant that two large files (~ 50MB in total) were no longer downloaded per user session.

Do as little processing as possible … in the app

In the above, we showed some steps that should reduce the amount of processing and data transfer for a typical user of the app. With those changes, the data processing pipeline was still inside the app. This is undesirable. For some users, the whole data processing pipeline will run during their session, which makes for a poor user experience. But it also means that some user sessions require considerably greater memory and data transfer requirements than others. If the data pipeline could run outside of the app, these issues would be eased.

If we move the data pipeline outside of the app, where should we move it? It is possible to run processing scripts in a few places. For this project, we chose to run the data processing pipeline on GitHub on a daily schedule, as part of a GitHub Actions workflow. This was simply because the source code is hosted there. GitLab, Bitbucket, Azure and many other providers can run scripts in a similar way.

So now, the data used within the app is processed on GitHub and uploaded to Azure before it is needed by the app.

The combination of these changes meant that the WHO/Europe COVID-19 vaccination programme monitoring app, which previously took ~ 1min (and occasionally ~ 4min) to load, now takes a matter of seconds.

What complexities might this introduce

In the simplest app presented here, all data processing was performed whenever a new user session was started. The changes described have made the app easier to use (from the user’s perspective) but mean that for the developers, coordination between the different components must be managed.

For example, if the data team upload some new raw data, there should be a mechanism to ensure that that data gets processed and into the app in a timely manner. If the source code for the app or the data processing pipeline change, the data processing pipeline should run afresh. If changes to the structure of the raw dataset mean that the data processing pipeline produces malformed processed data, there should be a way to log that.

Summary

Working with the WHO/Europe COVID-19 vaccination programme monitoring app has posed several challenges. The data upon which it is based is constantly updated and has been restructured several times, consistent with the challenges that the international community has faced. Here we’ve outlined some steps that we followed to ensure that the data underpinning this COVID-19 vaccination programme monitoring app is presented to the community in an up-to-date and easy to access way. To do this, we streamlined the app—making it do as little as possible when a user is viewing it—by downloading only what it needs, and by removing any extensive data processing.