Python API deployment with RStudio Connect: FastAPI

This is part two of our three-part series:

- Part 1: Python API deployment with RStudio Connect: Flask

- Part 2: Python API deployment with RStudio Connect: FastAPI (this post)

- Part 3: Python API deployment with RStudio Connect: Streamlit

RStudio Connect is a platform which is well known for providing the ability to deploy and share R applications such as Shiny apps and Plumber APIs, as well as plots, models and R Markdown reports. However, despite the name, it is not just for R developers. RStudio Connect also supports a growing number of Python applications, including Flask and FastAPI.

FastAPI is a lightweight web framework and, as you can probably tell from the name, it’s fast. It provides similar functionality to Flask in that it lets you build web applications and APIs. However, it is newer and is built on ASGI (the Asynchronous Server Gateway Interface) rather than WSGI, so it supports asynchronous request handlers. One of the nice features of FastAPI is that it is built on the OpenAPI and JSON Schema standards, which means it can automatically generate interactive API documentation with Swagger UI. You also get data validation for most Python data types through Pydantic. FastAPI is therefore another popular choice for data scientists creating APIs to interact with and visualize data.
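To give a feel for that Pydantic validation, here is a minimal sketch using a hypothetical `IrisMeasurement` model (not part of the demo app). FastAPI runs this same kind of check on request bodies and turns a failure into a 422 error response.

```python
from pydantic import BaseModel, ValidationError


class IrisMeasurement(BaseModel):
    """Hypothetical request body: Pydantic checks each field's type."""

    sepal_length: float
    sepal_width: float


# Numeric strings are coerced to floats in Pydantic's default (lax) mode
ok = IrisMeasurement(sepal_length="5.1", sepal_width=3.5)
print(ok.sepal_length)  # 5.1

# A value that cannot be parsed as a float is rejected with a ValidationError
try:
    IrisMeasurement(sepal_length="not a number", sepal_width=3.5)
except ValidationError as err:
    print(err.errors()[0]["loc"])  # ('sepal_length',)
```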

In this blog post we will go through how to deploy a simple machine learning API to RStudio Connect.

First steps

First of all, we need to create a project directory and install FastAPI. Unlike Flask, FastAPI doesn’t have a built-in development server, so to run our app locally we will also need to install an ASGI server such as uvicorn:

```bash
# create a project directory
mkdir fastapi-rsconnect-blog && cd fastapi-rsconnect-blog

# create and activate a new virtual environment
python -m venv .venv
source .venv/bin/activate

# install FastAPI and uvicorn
pip install fastapi
pip install "uvicorn[standard]"
```

Let’s start with a basic “hello world” app, which we will create in a file called fastapi_hello.py:

```bash
touch fastapi_hello.py
```

Our hello world app in FastAPI will look something like this:

```python
# fastapi_hello.py
from fastapi import FastAPI

# Create a FastAPI instance
app = FastAPI()


# Respond to GET requests on the root path
@app.get("/")
async def root():
    return {"message": "Hello World"}
```

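This can be run with:

```bash
uvicorn fastapi_hello:app --reload
```

The --reload flag restarts the server whenever the code changes, which is handy during development.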

The output will include the address where your API is running:

```text
INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
```

You can check it is working by navigating to http://127.0.0.1:8000 in your browser.

Example ML API

Now for a slightly more relevant example! In a data science project, a crucial step is often deploying your model so that it can be used in production. In this example we will use a simple API which provides access to a model’s predictions. Details of the model and of creating APIs with FastAPI are beyond the scope of this blog; however, this makes for a more interesting demo than “hello world”. If you are getting started with FastAPI, this tutorial covers most of the basics.

We will need to install a few more packages in order to run this example:

```bash
pip install scikit-learn joblib numpy
```

Our demo consists of a train.py script which trains a few machine learning models on the classic Iris dataset and saves the fitted models to .joblib files. We then have a fastapi_ml.py script where we build our API. This loads the trained models and uses them to predict the species classification for a given iris data entry.

First we will need to create the script in which we will train our models:

```bash
touch train.py
```

and then copy in the code below.

```python
# train.py
import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Load in the iris dataset
dataset = load_iris()

# Get features and targets for training
features = dataset.data
targets = dataset.target

# Define a dictionary of models to train
classifiers = {
    "GaussianNB": GaussianNB(),
    "KNN": KNeighborsClassifier(3),
    "SVM": SVC(gamma=2, C=1),
}

# Fit models
for model, clf in classifiers.items():
    clf.fit(features, targets)
    with open(f"{model}_model.joblib", "wb") as file:
        joblib.dump(clf, file)

# Save target names
with open("target_names.txt", "wb") as file:
    np.savetxt(file, dataset.target_names, fmt="%s")
```

We will then need to run this script with:

```bash
python train.py
```

You should now see a target_names.txt file in your working directory, along with the GaussianNB_model.joblib, KNN_model.joblib and SVM_model.joblib files.
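As an optional sanity check, the sketch below saves a fitted KNN model with joblib, loads it back and confirms the restored copy makes the same predictions. To keep it self-contained it retrains the model in memory and writes to a temporary directory; to inspect the real file you could instead call `joblib.load("KNN_model.joblib")`.

```python
import os
import tempfile

import joblib
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

dataset = load_iris()
clf = KNeighborsClassifier(3).fit(dataset.data, dataset.target)

# Round-trip the fitted model through a .joblib file
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "KNN_model.joblib")
    joblib.dump(clf, path)
    restored = joblib.load(path)

# The first row of the iris dataset is a setosa sample
sample = [[5.1, 3.5, 1.4, 0.2]]
class_idx = restored.predict(sample)[0]
print(dataset.target_names[class_idx])  # setosa
```

The predicted value is a class index, which is why the API below also needs target_names.txt to turn it back into a species name.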

Next we will create a file for our FastAPI app:

```bash
touch fastapi_ml.py
```

and copy in the following code to build our API.

```python
# fastapi_ml.py
from enum import Enum

import joblib
import numpy as np
from fastapi import FastAPI
from pydantic import BaseModel


# Enum of the available models; FastAPI uses it to validate the
# {model_name} path parameter and to list the options in the docs
class ModelName(str, Enum):
    gaussian_nb = "GaussianNB"
    knn = "KNN"
    svm = "SVM"


# Create a request body with pydantic's BaseModel
class IrisData(BaseModel):
    sepal_length: float
    sepal_width: float
    petal_length: float
    petal_width: float


# Load target names (entry i is the species name for class index i)
with open("target_names.txt", "rb") as file:
    target_names = np.loadtxt(file, dtype="str")

# Load the models saved by train.py
# (unpickling them does not require importing the sklearn classes here)
classifiers = {}
for model in ModelName:
    with open(f"{model.value}_model.joblib", "rb") as file:
        classifiers[model.value] = joblib.load(file)

# Create a FastAPI instance
app = FastAPI()


# Create a POST endpoint to receive data and return the model prediction
@app.post("/predict/{model_name}")
async def predict_model(data: IrisData, model_name: ModelName):
    clf = classifiers[model_name.value]
    test_data = [
        [data.sepal_length, data.sepal_width, data.petal_length, data.petal_width]
    ]
    class_idx = clf.predict(test_data)[0]  # predict model on data
    return {"species": target_names[class_idx]}
```
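Because `model_name` is typed as `ModelName`, FastAPI only accepts the three enum values in the path; a request such as /predict/RandomForest gets a 422 error response instead of reaching the endpoint. The plain-Python sketch below mimics that lookup to show the enum semantics (it is an illustration, not FastAPI’s internal validation code; `parse_model_name` is a hypothetical helper).

```python
from enum import Enum


class ModelName(str, Enum):
    gaussian_nb = "GaussianNB"
    knn = "KNN"
    svm = "SVM"


def parse_model_name(raw):
    """Return the matching ModelName, or None if raw is not a known value."""
    try:
        return ModelName(raw)  # lookup by value, e.g. "KNN" -> ModelName.knn
    except ValueError:
        return None  # FastAPI would respond with a 422 error here


print([m.value for m in ModelName])  # ['GaussianNB', 'KNN', 'SVM']
print(parse_model_name("KNN") is ModelName.knn)  # True
print(parse_model_name("knn"))  # None: matching is by value and case-sensitive
```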

We can run the server locally with:

```bash
uvicorn fastapi_ml:app --reload
```

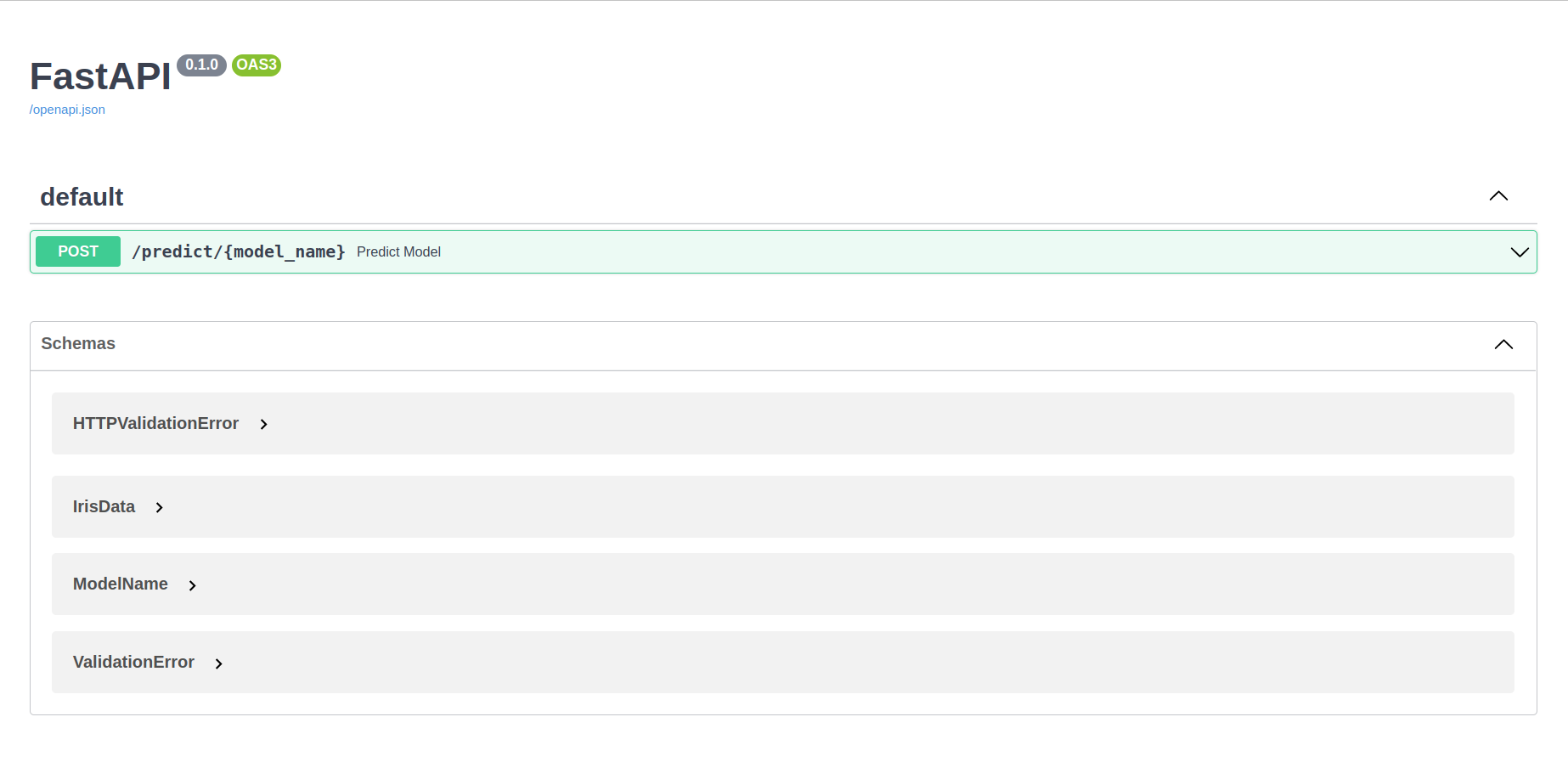

Now if you go to http://127.0.0.1:8000/docs, you should see a page that looks like this,

You can test out your API by selecting the ‘POST’ dropdown and then clicking ‘Try it out’.

You can also test the response of your API for the KNN model with:

```bash
curl -X 'POST' 'http://127.0.0.1:8000/predict/KNN' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{"sepal_length": 0, "sepal_width": 0, "petal_length": 0, "petal_width": 0}'
```

You can get the responses for the other models by replacing ‘KNN’ in the path with ‘GaussianNB’ or ‘SVM’.
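The same check can be scripted in Python with only the standard library. This sketch assumes the local uvicorn server is running on the default port; `build_predict_request` and `predict_all` are hypothetical helper names. Building the request is kept separate from sending it so the request can be inspected without a running server.

```python
import json
import urllib.request

# Assumes the local uvicorn server is running on its default address
BASE_URL = "http://127.0.0.1:8000"
MODEL_NAMES = ["GaussianNB", "KNN", "SVM"]


def build_predict_request(model_name, measurements, base_url=BASE_URL):
    """Build (but do not send) a POST request equivalent to the curl call."""
    return urllib.request.Request(
        url=f"{base_url}/predict/{model_name}",
        data=json.dumps(measurements).encode("utf-8"),
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def predict_all(measurements, base_url=BASE_URL):
    """Send the same measurements to every model and collect the species."""
    results = {}
    for name in MODEL_NAMES:
        request = build_predict_request(name, measurements, base_url)
        with urllib.request.urlopen(request) as response:
            results[name] = json.load(response)["species"]
    return results
```

With the server running, calling `predict_all({"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2})` returns a dictionary mapping each model name to its predicted species.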

Deploying to RStudio Connect

Deploying a FastAPI app to RStudio Connect is very similar to deploying a Flask app. First of all, we need to install rsconnect-python, which is the CLI tool we will use to deploy.

```bash
pip install rsconnect-python
```

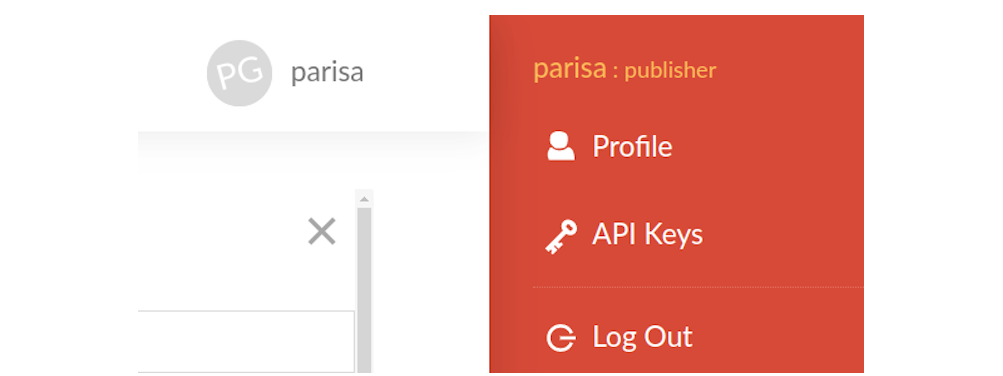

If you have not done so already, you will need to add the server that you wish to deploy to. The first step is to create an API key: log in to RStudio Connect, click on your user name in the top right corner, navigate to “API Keys” and add a new API key.

Remember to save the API key somewhere as it will only be shown to you once!

It is also useful to store the API key as an environment variable in a .env file. This can be done by running:

```bash
echo 'export CONNECT_API_KEY=<your_api_key>' >> .env
source .env
```

If you wish, you can also add an environment variable for the server you are using:

```bash
echo 'export CONNECT_SERVER=<your server url>' >> .env
source .env
```

Note that the server URL is the part of the URL that comes before connect/, and it must include a trailing slash.
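Before running the rsconnect commands, it can help to confirm those variables are actually set in your shell. The sketch below is a hypothetical pre-deploy helper (`check_connect_env` is not part of rsconnect-python); it only reads environment variables and applies the trailing-slash rule from the note above, without contacting the server.

```python
import os


def check_connect_env(environ=None):
    """Return a list of problems with the Connect variables (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    problems = []
    for name in ("CONNECT_SERVER", "CONNECT_API_KEY"):
        if not environ.get(name):
            problems.append(f"{name} is not set; did you run 'source .env'?")
    server = environ.get("CONNECT_SERVER", "")
    # Follows the post's note that the server URL needs a trailing slash
    if server and not server.endswith("/"):
        problems.append("CONNECT_SERVER should end with a trailing slash")
    return problems


for problem in check_connect_env():
    print(problem)
```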

Now we can add the server with:

```bash
rsconnect add --server $CONNECT_SERVER --name <server nickname> --api-key $CONNECT_API_KEY
```

You can check that the server has been added and view its details with:

```bash
rsconnect list
```

Before we deploy our app, there is one more thing to watch out for. Unless there is a requirements.txt file in the same directory as your app, rsconnect-python will generate the package list by freezing your current Python environment. Therefore, make sure you run the deploy command from within the virtual environment you created for your FastAPI app, since that is the environment which will be recreated on the server.

Aside: When writing this blog, I found a bug in pip on Ubuntu which adds pkg-resources==0.0.0 when freezing the environment. This causes an error when trying to deploy. To get around this, you can create a requirements.txt file for your current environment that excludes pkg-resources==0.0.0 with:

```bash
pip freeze | grep -v "pkg-resources" > requirements.txt
```
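If you prefer to generate the file from Python, a sketch of the same workaround is below. The helper names are hypothetical. Unlike the grep one-liner, which drops any line containing the text "pkg-resources", this version compares only the package name (case-insensitively, treating `_` and `-` as equivalent).

```python
import subprocess
import sys


def drop_pkg_resources(lines):
    """Remove the bogus pkg-resources pin that some Ubuntu pip builds emit."""
    kept = []
    for line in lines:
        name = line.split("==")[0].strip().lower().replace("_", "-")
        if name != "pkg-resources":
            kept.append(line)
    return kept


def write_requirements(path="requirements.txt"):
    """Freeze the current environment (like `pip freeze`) minus pkg-resources."""
    frozen = subprocess.run(
        [sys.executable, "-m", "pip", "freeze"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout.splitlines()
    with open(path, "w") as file:
        file.write("\n".join(drop_pkg_resources(frozen)) + "\n")
```

Run `write_requirements()` from inside the project’s virtual environment so that `sys.executable` points at that environment’s Python.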

When we deploy, we will also need to tell RStudio Connect where our app is located. We do this with the --entrypoint flag, whose value takes the form module_name:object_name. By default, RStudio Connect will look for an entrypoint of app:app, so here we need to point it at the app object in fastapi_ml.py.
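To make the module_name:object_name convention concrete, the sketch below resolves such a string by importing the module and fetching the named attribute. It is an illustration of the convention only, not rsconnect-python’s or uvicorn’s actual loader, and `resolve_entrypoint` is a hypothetical name.

```python
import importlib


def resolve_entrypoint(entrypoint="app:app"):
    """Import the object named by a 'module:object' string (illustrative only)."""
    module_name, _, object_name = entrypoint.partition(":")
    if not module_name or not object_name:
        raise ValueError(f"expected 'module:object', got {entrypoint!r}")
    module = importlib.import_module(module_name)
    return getattr(module, object_name)


# In the project directory, resolve_entrypoint("fastapi_ml:app") would import
# fastapi_ml.py and return its FastAPI instance. A standard-library example:
dumps = resolve_entrypoint("json:dumps")
print(dumps({"ok": True}))  # {"ok": true}
```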

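We are now ready to deploy our ML API by running the following from the fastapi-rsconnect-blog directory:

```bash
rsconnect deploy fastapi -n <server nickname> . --entrypoint fastapi_ml:app
```

The . tells rsconnect-python to bundle the current directory, which includes the trained .joblib models and target_names.txt that fastapi_ml.py loads at startup.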

Your ML API has now been deployed! You can check the deployment by following the output links which will take you to your API on RStudio Connect.
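If the content’s access settings require authentication, requests to the deployed API need your API key, which RStudio Connect accepts in an `Authorization: Key <api key>` header. The sketch below builds such a request using only the standard library. The content URL is a hypothetical placeholder: substitute the URL printed by `rsconnect deploy`. `build_connect_request` is likewise a hypothetical helper name.

```python
import json
import os
import urllib.request


def build_connect_request(content_url, model_name, measurements, api_key):
    """Build a POST to the deployed /predict/{model_name} endpoint."""
    return urllib.request.Request(
        url=content_url.rstrip("/") + f"/predict/{model_name}",
        data=json.dumps(measurements).encode("utf-8"),
        headers={
            "Authorization": f"Key {api_key}",  # RStudio Connect API key header
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_connect_request(
    "https://connect.example.com/content/1234/",  # hypothetical content URL
    "KNN",
    {"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2},
    os.environ.get("CONNECT_API_KEY", "<your_api_key>"),
)
print(request.full_url)  # https://connect.example.com/content/1234/predict/KNN
```

Passing the request to `urllib.request.urlopen` sends it and returns the JSON prediction, just as with the local server.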

Further reading

We hope you found this post useful!

If you wish to learn more about FastAPI or deploying applications to RStudio Connect you may be interested in the following links: