End-to-end testing with shinytest2: Part 2

This is the second of a series of three blog posts about using the {shinytest2} package to develop automated tests for shiny applications. In the posts we will cover

how to write and run a simple test using {shinytest2} (this post);

how best to design your test code so that it supports your future work.

Here, we will write a simple shiny app (as an R package) and show how to generate tests for this app using {shinytest2}. As discussed in the previous post, {shinytest2} tests your app as if a user were interacting with it in their browser. The tests generated are application-focussed rather than component-focussed and so give some overall guarantees on how the app should behave.

This post is slightly more technical than the last, and assumes that the reader is comfortable with creating and unit-testing packages in R, and with shiny development in general.

The packages used in this post can be installed as follows:

install.packages(

c("devtools", "leprechaun", "shiny", "shinytest2", "testthat", "usethis")

)

Initialising a shiny app as a package using leprechaun

At Jumping Rivers, our shiny applications are developed as packages. The one used here was generated with {leprechaun}. The workflow for building apps using leprechaun has three main steps.

First, initialise a new package:

# Create a new R package in the directory "shinyGreeter"

usethis::create_package("shinyGreeter")

Second, add a shiny app skeleton using leprechaun’s scaffold()

function (all subsequent code is evaluated inside the “shinyGreeter”

directory, created above):

# Add a basic shiny app and leprechaun-derived helper functions etc

leprechaun::scaffold()

Those two steps add all the code for a working (if basic) shiny app. To

run the app requires some additional steps: documenting and loading the

new package. Note that ‘documenting’ the package also ensures that any

dependencies will be made available to the loaded package (for us, if we

ran this app without having called document() it would fail to find

the shinyApp() function from {shiny}).

# Document the package dependencies etc

devtools::document()

# Load the package

devtools::load_all()

# Load (alternative):

# - install the "shinyGreeter" package

# - then use `library(shinyGreeter)`

Finally, to prove that we have created a working shiny app, we want to

run this skeleton app. For that purpose, leprechaun added a run()

function to our package as part of the scaffolding process (here, this

is shinyGreeter::run()). The running app doesn’t actually use the

leprechaun package at all.

# Start the app

run()

Please see the leprechaun documentation for further details on using this package to build your shiny applications.

Adding some testable functionality to the app

We still need something to test.

In a leprechaun app, the main UI and server functions are defined in

./R/ui.R and ./R/server.R, respectively. We will replace the code

that leprechaun added to these files as follows, to give us an app that

responds to user input.

# In ./R/ui.R

ui = function(req) {

fluidPage(

textInput("name", "What is your name?"),

actionButton("greet", "Greet"),

textOutput("greeting")

)

}

# In ./R/server.R

server = function(input, output, session) {

output$greeting <- renderText({

req(input$greet)

paste0("Hello ", isolate(input$name), "!")

})

}

The resulting app (which is based on an app in the {shinytest2} documentation) is a reactive “Hello, World!” app. The user enters their name into a text field and, after they click a button, the app prints out a “Hello ‘user’!” welcome message in response.

Since we have changed the source code, we have to reload the package prior to running the app. Then we can manually check that it behaves as expected.

devtools::document()

devtools::load_all()

run()
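As a complement to the manual check, the server logic can also be exercised without opening a browser. The following is a minimal sketch rather than part of the workflow above: it assumes the server function from ./R/server.R has been copied into the current session, and it uses shiny::testServer() with a mock session to simulate the text entry and the button click.

```r
library(shiny)

# Copy of the server function from ./R/server.R (pasted here so that the
# sketch is self-contained)
server = function(input, output, session) {
  output$greeting <- renderText({
    req(input$greet)
    paste0("Hello ", isolate(input$name), "!")
  })
}

# Drive the server with a mock session: set the inputs, then read the output
testServer(server, {
  # greet = 1 mimics a single click of the "Greet" button
  session$setInputs(name = "Jumping Rivers", greet = 1)
  stopifnot(output$greeting == "Hello Jumping Rivers!")
})
```

This only checks the reactive logic; it says nothing about whether the UI is wired up correctly, which is what the {shinytest2} tests in the rest of this post are for.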

Adding shinytest2 infrastructure to our app

{shinytest2} provides a utility function, similar to those in {usethis}, that adds all the infrastructure needed for its use:

shinytest2::use_shinytest2()

Here, this adds some files to the ./tests/testthat/ directory and adds

{shinytest2} as a suggested dependency of the package.

{shinytest2} requires an entry point into the app-under-test, so that it

knows how to start the app. Typical shiny apps have a top-level app.R

file (or a combination of global.R, server.R and ui.R) which is

used to run the app. To add {shinytest2} tests to a package, you can

either

- add a top-level app.R file (and so convert into a more typical shiny app structure),

- add an app.R into a subdirectory of ./inst/,

- or use a function (like the run() function added by {leprechaun}) that returns the shiny app object

Here, we choose the last of these approaches. See the shinytest2 vignette for more details on how to use {shinytest2} with a packaged shiny application.
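To see why a function can serve as the entry point, it helps to check what such a function returns. The sketch below uses a hypothetical stand-in for the run() function (the version generated by {leprechaun} may differ in its details) together with a deliberately tiny placeholder UI and server. The point is only that the function hands back a shiny app object without starting the app.

```r
library(shiny)

# Placeholder UI and server, just enough to build an app object
ui = fluidPage(textOutput("greeting"))

server = function(input, output, session) {
  output$greeting <- renderText("Hello!")
}

# Hypothetical stand-in for the package's run() function
run = function(...) {
  shinyApp(ui = ui, server = server, ...)
}

# Assigning the result does not launch the app; it is only started when the
# object is printed or passed to something that runs it
shiny_app = run()
is_app_object = inherits(shiny_app, "shiny.appobj")
is_app_object
```

An object of this class is what gets handed to {shinytest2} when we use the function-based entry point.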

For those who have used {testthat}, the {shinytest2} test cases will

look familiar and are placed in the same ./tests/testthat/ directory

as any other {testthat} test scripts. The code for initialising,

interacting with, and assessing the state of your app is written inside

a test_that() block. Hence, a typical test case might look as follows

(the GIVEN / WHEN / THEN structure is a common pattern in test code; we

use it here to separate the different steps in the test):

test_that("a description of the behaviour that is under test here", {

# GIVEN: this background information about the app

# (e.g., the user has opened the app and navigated to the correct tab)

shiny_app = shinyGreeter::run()

app = shinytest2::AppDriver$new(shiny_app, name = "greeter")

# WHEN: the user performs these actions

# (e.g., enters their username in the text field)

# ... code to perform the actions ...

# THEN: the app should be in this state

# (e.g., a customised welcome message is displayed to the user)

# ... code to check that the app is in the correct state ...

})

Any user actions that we are replicating in our test are performed by

calling methods / functions on the AppDriver object (named app in

the above).
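As a rough illustration of what those method calls look like outside of a test_that() block, here is a sketch that drives the greeter app directly. It assumes a headless Chrome-based browser is available for {shinytest2} to use, and it builds the app inline as a stand-in for shinyGreeter::run() so that the example is self-contained. The name "greeter-sketch" is arbitrary.

```r
library(shiny)
library(shinytest2)

# Stand-in for shinyGreeter::run(): the same UI and server, built inline
greeter = shinyApp(
  ui = fluidPage(
    textInput("name", "What is your name?"),
    actionButton("greet", "Greet"),
    textOutput("greeting")
  ),
  server = function(input, output, session) {
    output$greeting <- renderText({
      req(input$greet)
      paste0("Hello ", isolate(input$name), "!")
    })
  }
)

# Start the app in a background R process, viewed through a headless browser
app = AppDriver$new(greeter, name = "greeter-sketch")

# Each user action is a method call on the driver
app$set_inputs(name = "Jumping Rivers")
app$click("greet")
app$wait_for_idle()

# Read back a single output value, then shut the app down
greeting = app$get_value(output = "greeting")
app$stop()
print(greeting)
```

Calling app$stop() matters when working interactively, since otherwise the background process and browser session are left running.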

Recording your first test

A sensible first test for our app would be to ensure that when the user enters their name into the text box and clicks the button, a greeting is rendered on the screen. The simplest way to define this test is using the {shinytest2} test recorder.

To record a test using {shinytest2} you use shinytest2::record_test().

This works without a hitch if there is an app.R or ui.R / server.R

combo in your working directory. Since we are working against a package,

we have to tell record_test() how to run our app:

devtools::load_all()

shinytest2::record_test(run())

This may lead {shinytest2} to install some additional packages (e.g., {shinyvalidate}, {globals}).

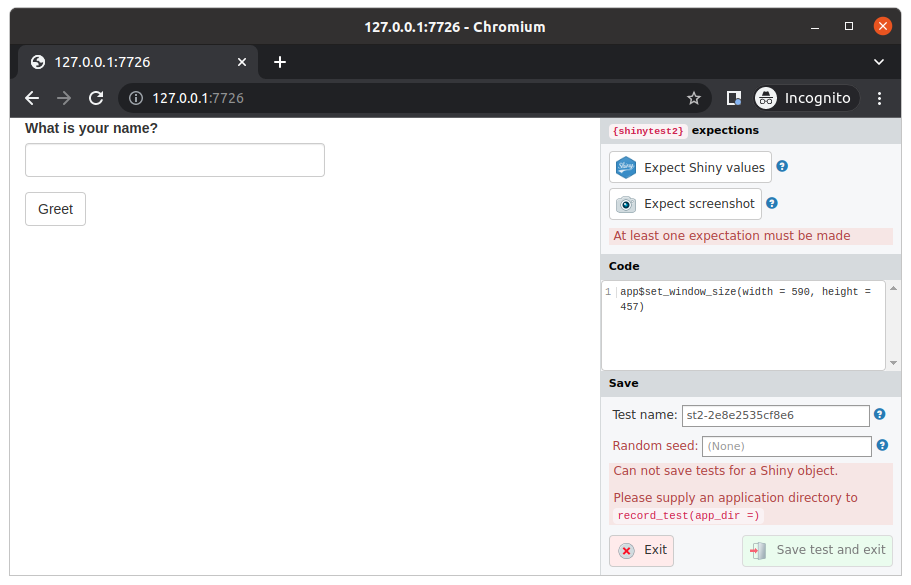

Eventually the app will open, with the {shinytest2} recorder panel alongside.

Note that the test recorder warns that it “Can not save

tests for a shiny object”. This happened because we passed a shiny app

object, rather than a directory, to record_test(). It’s nothing to

worry about though, because any code generated by {shinytest2} can be

copied from the code panel into a test script.

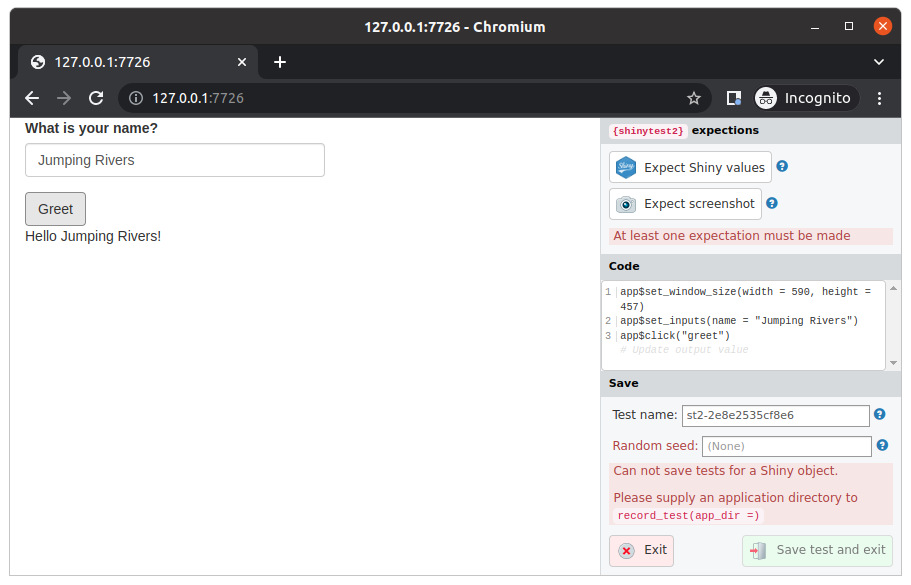

To test the app, we click on the “What is your name?” entry box, type “Jumping Rivers”, and then click on the “Greet” button.

We then click on the “Expect Shiny values” button in the test recorder panel.

A code snippet that looks like this is shown in the “code” panel.

app$set_window_size(width = 1619, height = 970)

app$set_inputs(name = "Jumping Rivers")

app$click("greet")

app$expect_values()

To turn that into a usable test, we create a test file in

./tests/testthat/ with a meaningful name. We’ll call it

test-e2e-greeter_accepts_username.R. The “e2e” in the file name

indicates that this is an “end-to-end” test (it tests against the

running app), and so distinguishes it from your unit tests and

testServer()-driven reactivity tests.

# Add the test script `./tests/testthat/test-e2e-greeter_accepts_username.R`

usethis::use_test("e2e-greeter_accepts_username")

We use the test skeleton described above:

# ./tests/testthat/test-e2e-greeter_accepts_username.R

test_that("the greeter app updates user's name on clicking the button", {

# GIVEN: The app is open

shiny_app = shinyGreeter::run()

app = shinytest2::AppDriver$new(shiny_app, name = "greeter")

app$set_window_size(width = 1619, height = 970)

# WHEN: the user enters their name and clicks the "Greet" button

app$set_inputs(name = "Jumping Rivers")

app$click("greet")

# THEN: a greeting is printed to the screen

app$expect_values()

})

(Note that the window-size command isn’t really part of the test, so we separate that from the test actions).

Running your first test

You’ve written a test. Now how do you run it?

# Method 1:

# - Type this in the console to load the package and run the tests in your R session

devtools::test()

# Method 2:

# - In RStudio, use the "Ctrl-Shift-T" shortcut to run the tests in a separate session

# Method 3:

# - Load the package

devtools::load_all()

# - and then run the tests

testthat::test_local()

Each of these approaches will load the current version of the package and run any tests found within it.
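Because end-to-end tests are slower than unit tests, it can be handy to run them separately. The "e2e" prefix in the file name makes this possible through the filter argument that {testthat} (and hence devtools::test()) provides. The sketch below demonstrates the filtering in isolation: it writes two placeholder test files (hypothetical names, trivial contents) into a temporary directory and runs only the one whose name matches "e2e".

```r
library(testthat)

# A throwaway test directory with one "unit" and one "e2e" test file
test_dir_path = file.path(tempdir(), "filter-sketch")
dir.create(test_dir_path, showWarnings = FALSE)

writeLines(
  'test_that("unit placeholder", { expect_true(TRUE) })',
  file.path(test_dir_path, "test-unit-example.R")
)
writeLines(
  'test_that("e2e placeholder", { expect_true(TRUE) })',
  file.path(test_dir_path, "test-e2e-example.R")
)

# filter is a regular expression matched against the test file names
results = as.data.frame(
  test_dir(
    test_dir_path,
    filter = "e2e",
    reporter = "silent",
    stop_on_failure = FALSE
  )
)

# Only the e2e file should have been run
files_run = unique(as.character(results$file))
files_run
```

Inside the package, the equivalent call would be devtools::test(filter = "e2e").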

It’s important to know that when your tests run, the app and the tests

run in different R sessions from each other. devtools::test() and

partners load the ‘under-development’ version of your package into the

session where the tests run. But, that isn’t where the app runs. For our

simple app, the code above is sufficient to ensure that the

‘under-development’ version of the app is used when the tests run

(AppDriver$new(shinyGreeter::run()) passes the correct version of the

app to the app-session). In more complicated situations, you may need to

install your package prior to running the {shinytest2}-based tests

(please see the

documentation).

When the test initially runs, a couple of warnings will show up.

![Warnings are thrown when a snapshot test is first run. The console in which the tests have been run showing two warnings. 'the greeter app updates users name on clicking the button' and 'the greeter app updates users name on clicking the button, each followed by 'new file snapshot: tests/testthat/_snaps/greeter-001_.png' The final lines are 'Results, Duration: 2.3 s, [FAIL 0 | WARN 2 | SKIP 0 | PASS 1]'](https://www.jumpingrivers.com/blog/end-to-end-testing-shinytest2-part-2/graphics/shinytest2-initial-snapshot-warning.png)

These indicate that some snapshot files have been saved within your

repository. The snapshots provide a ground truth against which

subsequent test runs are compared. For the test we’ve just written, the

snapshots are saved in the directory

./tests/testthat/_snaps/e2e-greeter_accepts_username/ as

greeter-001_.png and greeter-001.json and contain a picture of the

state of the app (in the .png) and any input / output / export values

that are stored by shiny (in the .json) at the point when

app$expect_values() was called.

Important: If you change the code for either your app, or your tests, you may have to update these snapshot files.

Rerunning your first test

We have written our first test case, and by doing an initial run, we have saved some snapshot files that contain our expectations for that test case.

If we now rerun the test using “Ctrl-Shift-T”, we see that the test passes, and we no longer see the warnings that arise when initially saving the snapshot files.

![The first successful snapshot test run. The console where the test was run, containing the text 'Loading shinyGreeter, Testing shinyGreeter, Loading required package: shiny, tick symbol | F W S OK | Context, tick symbol, | 1 | e2e-greeter_accepts_username [2.8s], Results, Duration: 2.8 s, [FAIL 0 | WARN 0 | SKIP 0 | PASS 1]'](https://www.jumpingrivers.com/blog/end-to-end-testing-shinytest2-part-2/graphics/shinytest2-first-passing-test.png)

Breaking your test and/or your app

We’ve got a passing test. Great!

Now let’s break it!

Sorry, what?

One of the purposes of these tests is to highlight when / where you’ve introduced some code that causes some important aspect of your app to break. So if we don’t know that the tests will fail when they should in an artificial setting, how can we expect them to fail when we need them to?

Let’s keep the snapshot files as they are and modify the test code:

# ./tests/testthat/test-e2e-greeter_accepts_username.R

test_that("the greeter app updates user's name on clicking the button", {

# ... snip ...

app$set_inputs(name = "Jumping Robots")

app$click("greet")

# ... snip ...

})

With the test case modified, it now fails when the tests are run, printing the following message:

Failure (test-e2e-greeter_accepts_username.R:13:3): the greeter app updates user's name on clicking

the button

Snapshot of `file` to 'e2e-greeter_accepts_username/greeter-001.json' has changed

Run `testthat::snapshot_review('e2e-greeter_accepts_username/')` to review changes

# ... snip ...

Warning (test-e2e-greeter_accepts_username.R:13:3): the greeter app updates user's name on clicking

the button

Diff in snapshot file `e2e-greeter_accepts_usernamegreeter-001.json`

< before

> after

@@ 2,8 / 2,8 @@

"input": {

"greet": 1,

< "name": "Jumping Rivers"

> "name": "Jumping Robots"

},

"output": {

< "greeting": "Hello Jumping Rivers!"

> "greeting": "Hello Jumping Robots!"

},

"export": {

We would have got the same test failure and a similar warning if

we had left the test-case unmodified and broken the app, by hard-coding

the app to bind “Hello Jumping Robots!” to output$greeting. You can

prove that for yourself, though.

If we look in the tests/testthat/_snaps/e2e-greeter_accepts_username/

directory, that test failure has led to two new files being added. We

have greeter-001_.new.png and greeter-001.new.json in addition to the

snapshot files that were added when the (correct) test-case was

initially run. The new files are the output from the app for the broken

version of our test.

We can compare what the app images look like after the two versions of the test using

testthat::snapshot_review("e2e-greeter_accepts_username/")

That opens a shiny app that allows us to update the snapshot files, should we wish to.

But the .json is a bit more interesting. This is the content for the original test-case:

{

"input": {

"greet": 1,

"name": "Jumping Rivers"

},

"output": {

"greeting": "Hello Jumping Rivers!"

},

"export": {

}

}

For the modified test-case, the input$name and output$greeting

values are modified.
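The comparison that the snapshot machinery performs can be mimicked by hand, which is a useful way to get a feel for what is stored. The sketch below is an illustration only: it embeds the two versions of the .json content as strings rather than reading the snapshot files, and it relies on {jsonlite} (installed as a dependency of {shiny}). The helper changed_values() is a hypothetical name introduced for this example.

```r
library(jsonlite)

# Snapshot content for the original test-case
original_json = '{
  "input": {"greet": 1, "name": "Jumping Rivers"},
  "output": {"greeting": "Hello Jumping Rivers!"},
  "export": {}
}'

# Snapshot content for the modified test-case
modified_json = '{
  "input": {"greet": 1, "name": "Jumping Robots"},
  "output": {"greeting": "Hello Jumping Robots!"},
  "export": {}
}'

# Hypothetical helper: flatten both snapshots and report the entries that
# differ (assumes both snapshots contain the same set of entries)
changed_values = function(old, new) {
  old_flat = unlist(old)
  new_flat = unlist(new)
  names(old_flat)[old_flat != new_flat]
}

changed = changed_values(fromJSON(original_json), fromJSON(modified_json))
changed
```

In this small app there are only three stored values, and two of them differ between the snapshots.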

As stated above, every input, output and export value that is

stored by shiny at the point when the snapshot was taken is present in

this .json file. For a large app, this will include many things

that are irrelevant to the behaviour that a given test-case is

attempting to check.

Again, one of the purposes of your tests is to help identify when some new code breaks existing functionality. What you don’t want is for a given test to fail when the behaviour it is assessing works fine, but some disconnected part of the app has changed.

With {shinytest2}, you can ensure that you only look at the value for specific variables when making snapshot files. This can help make your tests more focussed and prevent spurious failures.

Here, we might rewrite the test to just check the value stored in

output$greeting:

# ./tests/testthat/test-e2e-greeter_accepts_username.R

test_that("the greeter app updates user's name on clicking the button", {

# ... snip ...

# Only check the value of output$greeting

app$expect_values(output = "greeting")

})

With this, the values stored in the .json are more specific to this test:

{

"output": {

"greeting": "Hello Jumping Rivers!"

}

}

Similarly, you probably don’t want a behavioural test to start failing

when the look and feel of the app changes. To prevent {shinytest2} from

using snapshot images you can call

app$expect_values(..., screenshot_args = FALSE).

Now that our expected values are more specific to the test case, we can update the stored snapshot files.

testthat::snapshot_review("e2e-greeter_accepts_username/")

If you find yourself having to update the snapshot files for your tests often, or you find that when you update these snapshot files, the behaviour that you were testing seems to be working perfectly, you should try restricting the set of variables that are being checked by your tests (or preventing the tests from making pictures of your app).

Summary

Here, we created a simple shiny application and showed how to record and run tests against this app using {shinytest2}. The source code for the application can be obtained from GitHub.

The structure of a typical test case is similar to that used with {testthat} but you must start the app running before interacting with it or making any test assertions. The {shinytest2} recorder is able to generate test code to go inside these test cases, and we showed how to use the recorder with a packaged application. It is important to ensure that your tests are restricted to only make assertions about the behaviour of your application that is under scrutiny, so that your tests only fail when that behaviour changes.

The code generated here could be copied and modified to generate further test cases. Doing this might add lots of duplication across your test scripts, making your tests harder to maintain. In the next post in this series, we add a further test to the application and introduce some design principles that help ensure that your test code supports, rather than hinders, the further development of your application.